An instrument on the James Webb Space Telescope has been suffering from blurry vision.

But a team of Australian researchers has created an AI algorithm that fixes the problem, a major relief for the scientific community, which hopes to use the instrument to search for exoplanets around stars in our Milky Way galaxy.

The affected instrument is the Aperture Masking Interferometer (AMI), designed and built by a team of astronomers led by Professor Peter Tuthill from the University of Sydney in Australia. AMI is not one of the four main instruments on the James Webb Space Telescope (JWST) but a device that enables a special type of imaging on one of them, the Near-InfraRed Imager and Slitless Spectrograph (NIRISS).

AMI allows NIRISS to combine light from different sections of the telescope’s main mirror to increase the instrument’s sensitivity and resolution. The AMI component, consisting of an opaque mask with seven holes, was specifically designed to look for small and dim exoplanets around distant stars. But when astronomers first turned on the instrument, they found the images were coming back blurry.
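To give a sense of what a seven-hole mask provides: in aperture masking, each pair of holes acts as one interferometric baseline, so the number of baselines is simply the number of distinct pairs. The short Python sketch below counts them. The hole labels are placeholders chosen for illustration, not the real mask layout.

```python
from itertools import combinations

N_HOLES = 7  # from the article: the mask has seven holes

# Hypothetical labels for illustration; the real hole positions are not given here.
holes = [f"hole_{i}" for i in range(1, N_HOLES + 1)]

# Each unordered pair of holes forms one baseline.
baselines = list(combinations(holes, 2))
n_baselines = len(baselines)

print(n_baselines)  # 21, i.e. 7 * 6 / 2
```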

The issue was reminiscent of a major flaw in the optics of Webb’s predecessor, the Hubble Space Telescope, which was shown to be noticeably near-sighted after reaching orbit in 1990. Hubble’s defect stemmed from imperfections in its primary mirror, and the fix required a crewed space mission costing hundreds of millions of dollars. In 1993, a team of astronauts mounted a set of corrective mirrors in front of the telescope’s sensors to allow it to produce images of the expected quality.

For Webb, however, such a mission is out of the question. Hubble orbits about 320 miles (515 kilometers) above Earth, barely 70 miles (110 km) above the International Space Station. Webb, by contrast, accompanies the planet at a distance of about 930,000 miles (1.5 million km), nearly four times farther from Earth than the moon. No human space mission has ever flown that far.
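The scale of that gap is easy to check with a few lines of arithmetic. The Python sketch below uses the distances quoted above. The average Earth-moon distance of 384,400 km is an assumption brought in for the comparison and is not from the article.

```python
WEBB_DISTANCE_KM = 1_500_000  # from the article
HUBBLE_ALTITUDE_KM = 515      # from the article
MOON_DISTANCE_KM = 384_400    # assumption: average Earth-moon distance

# How many times farther Webb is than the moon and than Hubble's orbit.
webb_vs_moon = WEBB_DISTANCE_KM / MOON_DISTANCE_KM
webb_vs_hubble = WEBB_DISTANCE_KM / HUBBLE_ALTITUDE_KM

print(f"Webb is about {webb_vs_moon:.1f} times farther than the moon")    # 3.9
print(f"Webb is about {webb_vs_hubble:.0f} times higher than Hubble")     # 2913
```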

The blurriness in Webb’s AMI images was traced to electronic distortions arising in the detector of Webb’s infrared camera.

To fix the problem, former University of Sydney Ph.D. students Max Charles and Louis Desdoigts developed a neural network, a type of AI algorithm inspired by the functioning of the human brain, that detects and corrects the pixels affected by the electrical charges distorting the observations.

The algorithm, called AMIGO (for Aperture Masking Interferometry Generative Observations), has been shown to work remarkably well.

“Instead of sending astronauts to bolt on new parts, they managed to fix things with code,” Tuthill said in a statement.

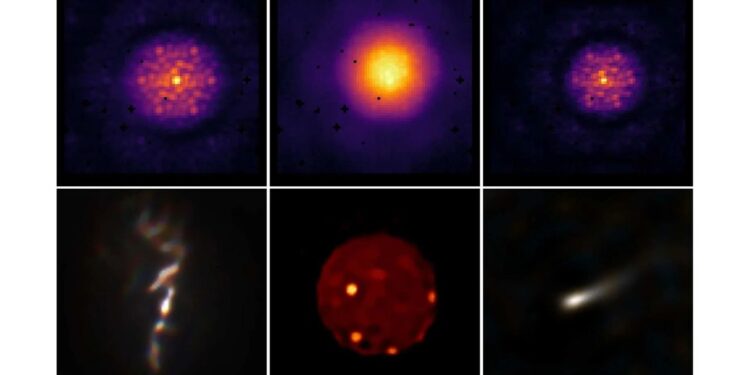

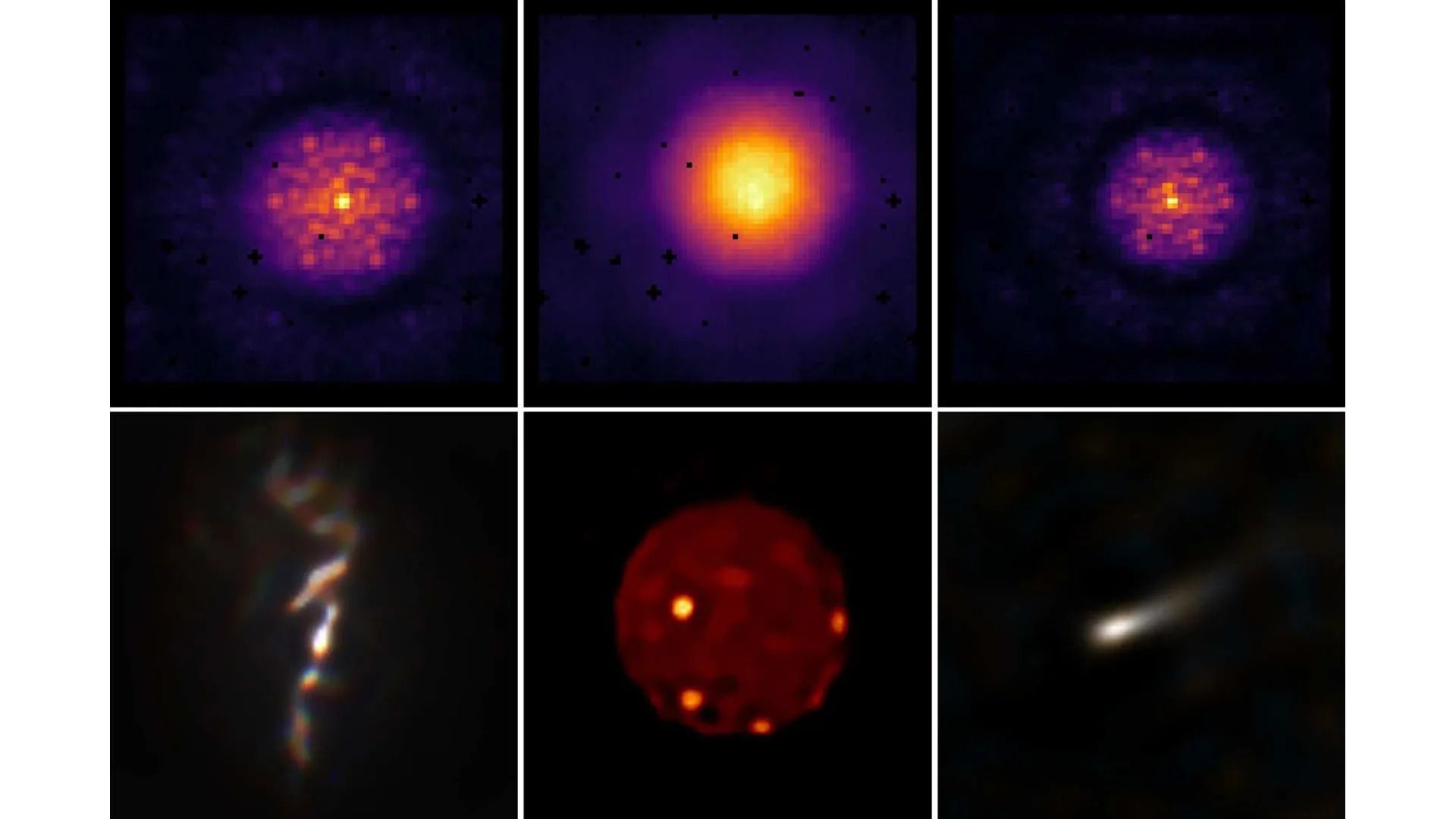

The researchers demonstrated AMIGO’s sharpening skills on images of a dim exoplanet and a very cool, low-mass star (a red-brown dwarf) some 133 light-years from Earth. In another imaging campaign, AMI, assisted by AMIGO, was able to produce detailed images of a black hole jet, the volcanic surface of Jupiter’s moon Io, and stellar winds emanating from a distant variable star.

“This work brings JWST’s vision into even sharper focus,” Desdoigts, who is now a postdoctoral researcher at Leiden University in the Netherlands, said in the statement. “It’s incredibly rewarding to see a software solution extend the telescope’s scientific reach.”

The James Webb Space Telescope, operational since July 2022, has revolutionized astronomy, revealing unexpected details about the formation of early galaxies and black holes. It has also made significant contributions to the study of exoplanets, making unprecedented measurements of the composition of their atmospheres. With AMI now working at full capacity, Webb is set for even more mind-boggling discoveries.